The Problem

The vast volumes of data collected across the clinical trials process give pharma and biotech companies the opportunity to improve clinical trial design, patient recruitment, site selection, monitoring insights and overall decision making. Unlocking the potential in existing data sources, including biomarker data, genomic data, electronic health records (EHRs), claims data, real-world data and clinical trial data, can ultimately lead to improved drug development. It is critical, however, to delineate the challenges for big data applications. There are many methodological issues, such as data quality, data inconsistency and instability, limitations of observational studies, validation, analytical issues, and legal issues. In particular, the data quality of electronic health records needs to improve.

Key areas where the problem occurs:

- Handling high-volume data

- Shortage and management of data scientists

- Real-time collection of dynamic data

- Getting real-time insights

- Data governance and security

- Wastage of resources and drugs

- Expensive manual processes

- Too many options in big data tooling

- Difficulty scaling up, among other challenges

Big data analytics needs to be integrated into clinical practice to reap substantial benefits.

Solutions

- Currently, 80% of all healthcare information is unstructured data. Big data technology will make data storage less expensive and more available. With the help of the cloud, it will become easier to handle large amounts of genetic data for research and to meet the challenge of interpreting many patient-related parameters when personalizing treatment.

- Big data analytics can analyze monitoring data in an intensive care unit. In current practice, the continuous data stream generated by vital-sign monitors and mechanical ventilators is of limited use to clinicians, since real-time, complex analyses are often not possible.

- A clinical decision support user interface can present results from real-time, complex analyses. The aim is for the analytics to enable identification of ineffective efforts in mechanically ventilated patients, catheter-related bloodstream infection, suboptimal nutrition adherence and patient deterioration.

- Hadoop delivers value with optimal health resource utilization across different patient cohorts, a holistic view of cost/quality tradeoffs, analysis of treatment pathways, competitive pricing studies, concomitant medication analysis, clinical trial targeting based on geographic & demographic prevalence of disease, prioritization of pipelined drug candidates, metrics for performance-based pricing contracts, drug adherence studies, and permanent data storage for compliance audits.

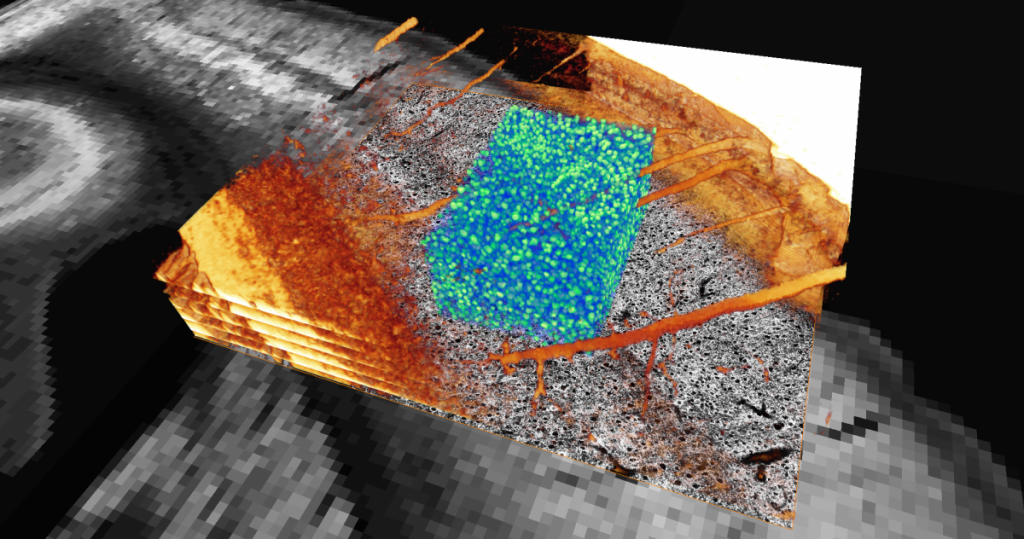

- In the electronic health record, data is constantly collected but not exploited to offer personalized care. Predictive analytics can be developed to personalize care, and also to generate heatmaps and classification pyramids for specific patient populations in a hospital.

- Minimizing waste across the drug manufacturing process, and empowering researchers, clinicians, and analysts to unlock insights from translational data that drive evidence-based medicine programs.
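The real-time analysis of monitoring data described in the list above can be sketched as a rolling-window check over a vital-sign stream. This is a minimal illustration under stated assumptions, not a clinical algorithm: the function name `flag_deterioration`, the window size, the threshold and the sample heart-rate values are all hypothetical and chosen only for the example.

```python
from collections import deque
from statistics import mean


def flag_deterioration(readings, window=5, threshold=100.0):
    """Yield (index, rolling_mean) whenever the mean of the last
    `window` readings exceeds `threshold`.

    `readings` can be any iterable, so the same code works on a
    live stream as well as on a stored list.
    """
    recent = deque(maxlen=window)  # keeps only the newest `window` values
    for i, value in enumerate(readings):
        recent.append(value)
        # Only evaluate once the window is full, to avoid noisy early alerts.
        if len(recent) == window:
            avg = mean(recent)
            if avg > threshold:
                yield i, avg


# Hypothetical heart-rate samples (beats per minute), one per time step.
heart_rate = [82, 85, 88, 95, 104, 110, 118, 121, 96, 90]

alerts = list(flag_deterioration(heart_rate, window=3, threshold=100.0))
for index, avg in alerts:
    print(f"time step {index}: rolling mean {avg:.1f} bpm exceeds threshold")
```

Averaging over a window rather than alerting on single readings trades a short delay for fewer false alarms; a production system would tune both parameters per signal and per patient population.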

Big data addresses several challenges, but there are still many issues to overcome before the benefits of big data analytics can be maximized. It is therefore important for those who aim to develop big data analytics to collaborate and learn from other solutions. Most big data initiatives and commercial entities address challenges of the big data value chain, such as the aggregation of data and real-time visualization of risk factors, in their own unique way.

Our Expertise

We have expertise in risk analysis, pharmacogenomics, real-world data and evidence, clinical fraud detection, modelling life cycle management, identification of new potential drug candidates, monitoring of patterns, causes, and effects, predictive analysis, sentiment analysis, patient identification, data analytics, and enrolment modelling.

We have experience with relational and non-relational data stores such as MySQL, Oracle, DB2, Teradata, HBase, HDFS, MongoDB, CouchDB, and Cassandra.

We have:

- Understanding of and familiarity with frameworks such as Apache Spark, Apache Storm, Apache Samza, Apache Flink, and the classic MapReduce and Hadoop.

- Command of programming languages and analysis tools such as R, Python, Java, C++, Ruby, SQL, Hive, SAS, SPSS, MATLAB, Weka, Julia, and Scala. As you can see, not knowing a language should not be a barrier for a big data scientist.

- Business knowledge of the domain.

What do we deliver?

- Web Apps, Mobile Apps, Custom Software

- SaaS, DaaS

- Real-time data-driven platforms, dashboards

- Reports, APIs, tools

- Support, maintenance, and troubleshooting

Our Services Include:

- Scalability: we manage storage capacity and computing power so that solutions scale.

- 24-hour availability

- Performance

- Tailored, flexible and pragmatic solutions

- Full transparency

- High-quality documentation

- A healthy balance between speed and accuracy

- A team of experienced quantitative professionals

- Privacy

- Fair pricing

Technology Stack:

- Big Data Processing Tools: Hadoop Distributed File System (HDFS) and a number of related components such as Spark, Scala, Apache Hive, HBase, Oozie, Pig, and ZooKeeper

- Big Data Analysis Tools: Hadoop and MapReduce, Apache Spark, Dryad, Storm, Apache Drill, Jaspersoft, Splunk

- Programming: Python, R, Java

- Stack: MEAN, MERN, Full-Stack

- Data Models: Data Lake, Data Modeling, Data Integration, Data Platforms, Data ETL

- Analytics Models: Statistical Models, Machine Learning Models, AI Models, Deep Learning Models

- BI: Tableau, Power BI, Elasticsearch, Kibana